Why Experts Get It Wrong

Even when the expert is kind and clear, the mental trail they’re following can feel invisible to the rest of us.

Not long ago, I sat across from a tax consultant who clearly knew his stuff. He talked me through deductions, retirement contributions, and something called a “safe harbor rule.” He was patient, professional, and even kind about it. But as the conversation went on, I felt myself slowly drifting into a quiet kind of panic. I didn’t want to admit it, but I had no idea what he was talking about. It wasn’t that he was being unclear—it was that I couldn’t follow the mental trail he was walking, because I hadn’t been down that road before.

What struck me afterward wasn’t how complicated the topic was. It was how familiar this dynamic felt—how easy it is, when you know a lot about something, to forget what it’s like to not know. And how hard it is, when you're on the other end, to speak up and say, “Wait... I’m not with you.”

I’ve been on both sides of that conversation. I’ve been the confused one, nodding along just to keep the moment moving. And I’ve also been the one giving the advice—so sure I was helping, only to realize later that I’d skipped something crucial. Not on purpose, just... without noticing. And that’s really what this article is about.

It’s not a critique of experts or a rant against advice. It’s a reflection on how good intentions and deep knowledge can still miss the mark—and how that doesn’t make anyone a bad person. Just human.

If you’ve ever walked away from someone’s advice—whether from a trained expert or just someone who knew more about a topic than you did—feeling more confused than confident, or like something just didn’t quite connect, you’re not alone. And if you’ve ever tried to explain something you understand well, only to watch it fall flat, you’re not alone in that either. I’m writing this not because I’ve figured it out, but because I’m trying to. I’ve been on both sides, and I still get it wrong. But maybe, by exploring these blind spots together, we can find better ways to share what we know. Ways that feel clearer. Kinder. A little more human.

The Curse of Knowledge

The more familiar an idea becomes, the harder it is to notice what others still need to understand.

One of the trickiest things about knowing something really well is that it gets harder to imagine not knowing it. This is called the curse of knowledge.

I first learned about it through a simple but brilliant experiment run in 1990 by a Stanford graduate student named Elizabeth Newton. She set up a game where one person would tap out the rhythm of a well-known song (like “Happy Birthday” or “Twinkle, Twinkle, Little Star”) using just their fingers. Another person would listen and try to guess the song.

Before the tapping began, the tappers were asked to predict how often their listeners would guess the song correctly. On average, they thought the success rate would be about 50%. In their minds, the melody was obvious—they could hear it clearly as they tapped along. But for the listeners, all they heard was a series of disconnected taps.

The real success rate? Listeners guessed the right song only about 2.5% of the time.

It’s such a powerful reminder: once we know something—whether it’s a song, a concept, or a way of solving a problem—we don’t just hold the facts. We carry the experience of knowing, too: the shortcuts, the intuition, the invisible connections that make it feel simple to us. But that experience stays mostly hidden.

I think about that experiment all the time now, especially when I’m trying to explain something I know well. It’s so easy to assume that what’s obvious to me must be obvious to the person I’m talking to. Not because I’m trying to be arrogant. Just because it’s hard to remember what it was like before I understood it.

Experts—and really, anyone who’s spent a lot of time with a subject—carry that invisible melody with them. And without realizing it, they sometimes leave out the parts that would help someone new to the topic hear the song.

It’s not a failure of intelligence or effort. It’s just one of those very human blind spots. One that’s easy to fall into, and hard to notice in ourselves until someone points it out—or until we find ourselves on the confused end of the conversation.

Fixation Bias

The more we rely on what’s worked before, the harder it becomes to notice when something simpler is right in front of us.

Fixation bias is the habit of getting stuck on familiar solutions, even when a new or easier answer is available. Psychologists often refer to this as the Einstellung effect—Einstellung being a German word that means "setting" or "installation." In this context, it points to the way our thinking can become locked into certain patterns, or a mental set, without us even noticing.

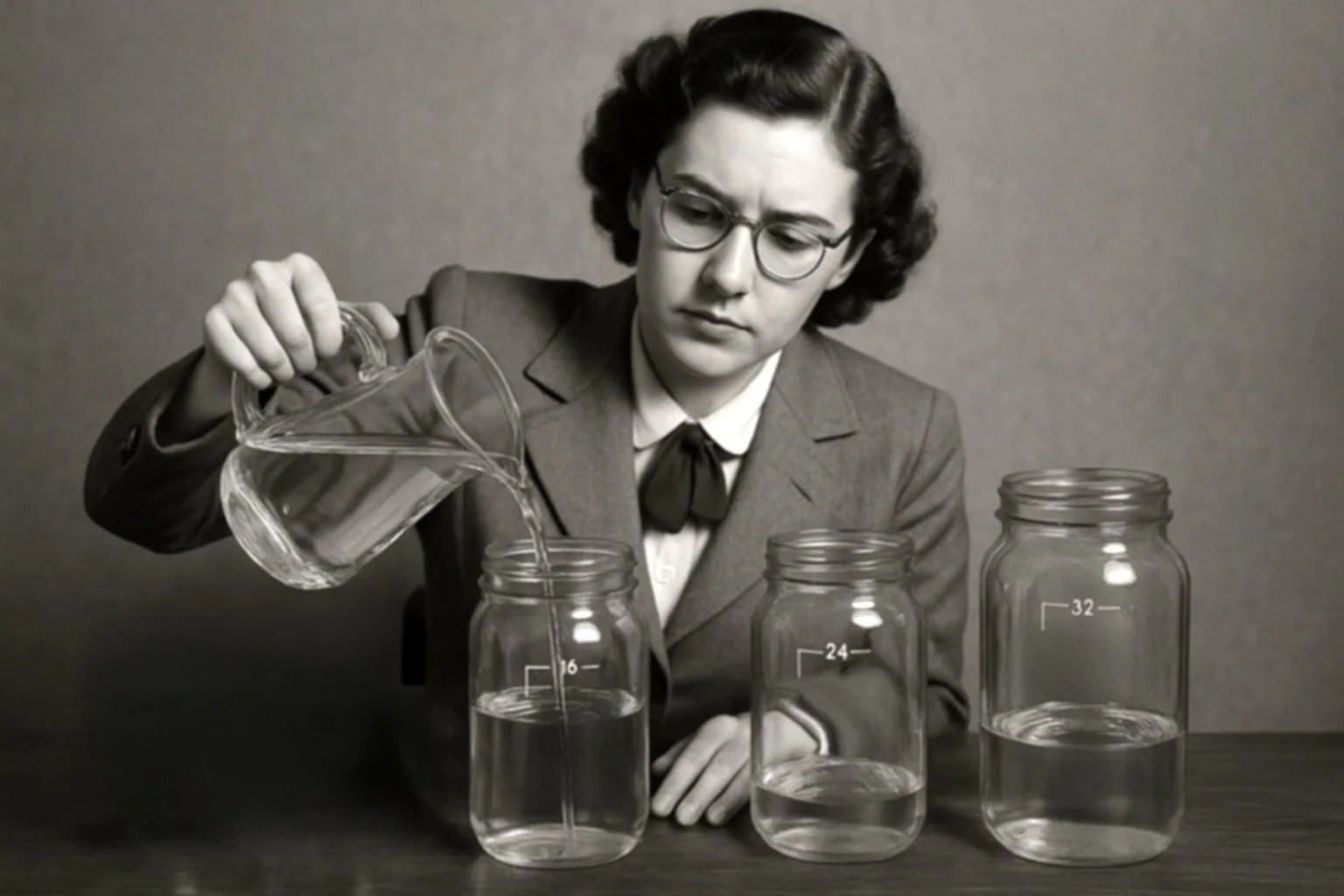

This bias was identified through a series of experiments by Abraham Luchins in the 1940s. In one version, participants were given a set of water jars of different sizes and asked to measure out a specific amount of water. Early problems required a somewhat complicated solution—several steps strung together just to get the right amount. Over time, participants learned this complex method and grew comfortable using it.

Then came a shift. Later problems could be solved in a much simpler way—just one or two steps. But most participants didn’t notice. They kept using the more complicated solution they had practiced before. Their familiarity with the old method made it hard for them to even see the easier path.

What stands out even more is how some participants reacted when the simpler solution was pointed out. There was resistance. Discomfort. Even a bit of defensiveness. It wasn’t just about missing the easier answer; it touched something deeper—a feeling that maybe their earlier effort had been wasted, or that admitting a simpler way meant admitting a mistake.

It happens in everyday life whenever someone with experience—whether an official expert or simply someone with more knowledge in a particular area—leans too heavily on familiar strategies. Without meaning to, they may offer advice or solutions that are more complicated, rigid, or outdated than the situation actually requires. Their experience, while valuable, can sometimes make it harder for them to recognize when a different approach would serve better.

For those of us seeking help, it can leave us following advice that feels unnecessarily complex or poorly suited to our circumstances. That isn't because we misunderstood. It's because the person offering guidance was operating from a mental set, an Einstellung, shaped by their past successes. Familiarity can be reassuring, but it isn't always the best fit for the present need.

I recognize this more clearly now because I’ve felt it in myself. I’ve clung to complicated methods and ideas long after simpler, better options were available—not because I wanted to be difficult, but because those old ways felt safe. They had history behind them. Letting them go felt like letting go of part of my own effort and identity, even when the change would have made things easier.

Fixation bias reminds me that experience is valuable, but it can also be limiting if we aren't careful. Even the paths that served us well once might not always be the paths we need now. And noticing that—letting ourselves be open to it—is harder than it sounds, whether we’re offering advice or receiving it.

When We See It Everywhere: The Frequency Illusion

The moment something enters our awareness, it starts showing up everywhere—not because the world changed, but because we did.

Have you ever thought about buying a particular car—maybe a model you hadn’t really noticed before—and then, suddenly, it feels like that car is everywhere? On the freeway. In parking lots. At stoplights. The world seems to fill up with them almost overnight, leaving you wondering if they were always there and you just never noticed.

That’s the frequency illusion, also called the Baader-Meinhof phenomenon. It happens when something we've just learned about or started paying attention to seems to appear with surprising frequency. It’s not that the thing itself has changed or become more common—it’s that our minds have been tuned to notice it.

The name Baader-Meinhof phenomenon comes from a reader of a Minnesota newspaper, who once described reading an article about the Baader-Meinhof Group—a far-left militant organization active in Germany in the 1970s—and then, shortly after, seeing another mention of the same group in an unrelated article. Before that moment, he had never heard of them. Afterward, it felt like references to Baader-Meinhof were everywhere. The story stuck, and now an unrelated German militant group lends its name to the experience of noticing something repeatedly after first encountering it.

What’s happening during the frequency illusion isn’t a change in the outside world; it’s a change in our awareness. Our brains are constantly filtering information, letting most of it pass by unnoticed. But once something enters our field of attention, it lights up. It feels more frequent, more important, and more visible—not because the world has shifted, but because we have.

I remember reading an article that emphasized the importance of interactivity in instructional design. It laid out strong arguments—how interactive elements boost engagement, comprehension, and retention—and the examples were vivid enough to stick with me long after I finished reading.

From that moment on, every course I looked at seemed like it was missing something if it didn’t include a drag-and-drop, a clickable hotspot, or a branching scenario. I started adding interactive components to nearly everything I worked on, assuming that more interactivity was always better.

But in hindsight, I had tunnel vision. I wasn’t wrong about the value of interactivity—but I was no longer asking when or why it made sense. I was just seeing it because it was top of mind.

This matters when we’re receiving advice—or giving it. Someone who has recently discovered a particular method, strategy, or idea might see it everywhere and believe it holds special significance. They aren’t trying to exaggerate or mislead anyone. Their mind has simply latched onto a pattern, and that pattern feels bigger and more urgent to them than it might actually be.

It’s easy to see this play out in personal conversations too. Maybe a friend recently learned about the importance of "setting healthy boundaries" in relationships—an important concept, no doubt. After learning about it, they might start seeing boundary issues everywhere, even in places where the real struggle isn’t boundaries at all. If you ask them for advice about a relationship problem, they might quickly suggest that your issue is a lack of boundaries, even if your situation is more about communication, trust, or timing. They aren't trying to give bad advice. It's simply that the idea of boundaries has become so prominent in their thinking that it now feels like the central issue in every situation they encounter.

When we’re on the receiving end, it’s worth remembering that the intensity with which someone talks about an idea doesn’t always reflect its true weight or relevance. Sometimes, it simply reflects what they’ve recently come to notice and value.

And when we find ourselves sharing advice, it’s worth pausing, too. Are we offering something because it truly fits the situation—or because it feels newly important to us?

Either way, it’s not a flaw. It’s part of how our minds are wired. What we notice, we value. And what we value, we tend to share.

Falsification Blindness

When people offer advice, especially advice rooted in their own experience, it’s natural for them to focus on the reasons why it should work. They often see all the ways it helped them or others they’ve known. And without realizing it, they can start to overlook the ways their advice might not fit a new situation. They may be experiencing falsification blindness.

The philosopher Karl Popper once pointed out that real strength doesn’t come from proving an idea right—it comes from the idea being able to survive serious attempts to prove it wrong. But in everyday life, especially when someone feels confident about what they’re sharing, the instinct to invite that kind of questioning often fades. The focus shifts from testing advice to defending it.

When advice doesn’t work as expected, it’s common to hear things like, "You must not have done it right," or, "You forgot an important step." The idea that the advice itself might not apply—or might not be the best fit—is often the last thing considered. Especially when the advice-giver has seen it work in the past, it can be difficult for them to imagine where it might fall short.

Experts are just as vulnerable to this as anyone else—sometimes even more so. The more success someone has had using a particular method or solution, the harder it can be for them to imagine the conditions where it might fail. Success reinforces their sense of certainty. And certainty, if left unchecked, can quietly narrow their openness to exceptions, nuances, or shifts in context.

I can see this same pattern in myself. There have been times when I gave advice with full confidence, only to later realize I had been so focused on how well it had worked for me that I didn’t leave enough room to wonder if it would work differently for someone else. When things didn’t go as expected, my first instinct was often to believe something must have been missed or misunderstood—not to consider that maybe my advice simply wasn't the right fit.

Reflecting on those moments, I realize how easy it is to protect the things we believe in, especially when those things have helped us. But real wisdom, I think, asks something harder: to stay curious even about the things we trust most. To be willing to ask, even quietly to ourselves, Where could this go wrong? Especially when it feels most certain.

The Armchair Quarterback Problem (Empathy Gaps)

It’s always easier to call the play from the couch—especially when you’re not the one who has to live with the outcome.

It’s easy to see things clearly from the sidelines. Watching a football game from a couch, it’s obvious what the quarterback should have done differently. Watching someone else’s conflict at work, it’s easy to see what they should have said. From a distance, mistakes look simple. Solutions look obvious.

But living those moments in real time—under stress, fear, uncertainty—is something else entirely. And even people with deep expertise, who genuinely want to help, can underestimate that difference. It's not that they don't care. It's that they aren't inside the moment.

And more than that, they aren't the ones who have to live with the consequences if the advice goes wrong. If a technique for staying calm during a panic attack fails, or if speaking up in a difficult meeting backfires, the emotional fallout doesn’t land on the person who suggested it. The risk is carried by the person trying to apply the advice, not by the person giving it.

Without realizing it, advice-givers often have no skin in the game. And that insulation, that empathy gap, can make advice feel much simpler, safer, and more obvious from their side of the conversation.

Experts, in particular, can fall into this pattern. Their familiarity with the solution, combined with their distance from the risk, can make hard things sound easy. Not because they are being careless or arrogant—but because the emotional cost isn’t immediately visible to them.

I think about this every time I remember a moment from my teenage years, sitting with friends as we played endless rounds of Tekken 3 on the PlayStation. I used to sit and watch others play, picking apart their decisions in my head: "Why didn’t you dodge that move?" "Why didn’t you block?" It all seemed so obvious from the outside. But the moment I picked up the controller myself, everything changed. Timing felt different. Pressure made simple moves feel clumsy. Choices that seemed obvious before became a blur of panicked reactions.

It’s humbling to realize how different things feel from the inside. And it's even more humbling to recognize how often I’ve given advice without fully considering that difference.

When someone gives advice from a place of emotional distance—and with no direct risk if it goes wrong—it’s not because they’re trying to dismiss the difficulty. It’s because, from their side, the difficulty and the danger are both invisible.

For me, it’s a reminder that real empathy isn’t just about wanting to help. It’s about remembering that clarity from the outside is not the same thing as clarity from within. And that sometimes, the hardest advice to follow is the advice that sounds easiest from a distance.

Expertise Isn’t Broken, It’s Human

When advice falls short—when it feels confusing, overly complicated, misplaced, or impossible to apply—it’s tempting to blame the advice-giver. To assume they were careless. Or arrogant. Or out of touch.

But the patterns I've been exploring here—curse of knowledge, fixation bias, frequency illusion, falsification blindness, empathy gaps—aren’t failures of intelligence or caring. They’re part of how human minds work.

Even the most thoughtful experts, the most well-meaning mentors, the most experienced guides are still navigating the same cognitive habits and blind spots as everyone else. They're not immune to them just because they know more. In fact, sometimes, knowing more makes these blind spots even harder to see.

I see it in myself, too. I’ve fallen into these patterns without realizing it. I’ve given advice that made sense to me but missed the person in front of me. I’ve leaned on solutions that felt safe because they had worked before. I've forgotten how different things can feel when you're the one holding the weight of a decision.

And yet, I don't think the answer is to stop trusting experts—or to stop offering what we know to others. Maybe the answer is to trust a little differently. To hold advice gently instead of gripping it tightly. To stay curious about what we know, and humble about what we might still be missing. And to remember that offering knowledge is an act of hope, but listening—to the situation, to the person, to the reality in front of us—is just as much an act of wisdom.

Because expertise, like everything human, isn’t perfect. It doesn’t have to be. It just has to stay open.